When Science Pushes Hardware to Its Limits

Scientific computing has never been gentle on machines. Every time researchers run a global climate model, map genetic codes, or simulate molecular behavior, the system behind the scenes faces brutal demands. Multi-GPU nodes hum under full load for days, consuming kilowatts of power and generating enough heat to warm a small office.

To put that into perspective: a single high-resolution climate simulation can run for 48+ hours with GPUs operating at 90–95% utilization. Genomic sequencing often sustains 1.2 kW per node for 36 hours straight. At that level, the biggest threat isn't code efficiency — it's heat.

| Simulation Type | Average GPU Load | Power Consumption | Runtime |
| --- | --- | --- | --- |
| Climate Modeling | 90–95% | 1500 W | 48+ hours |
| Genetic Simulation | 85–92% | 1200 W | 36+ hours |
| Molecular Dynamics | 88–94% | 1350 W | 40+ hours |
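To get a feel for what those figures mean in energy terms, the short sketch below multiplies each workload's power draw by its minimum runtime. It assumes the draw stays constant at the listed value for the whole run, which is a simplification, and it relies on the fact that essentially all electrical power a server consumes ends up as heat the chassis has to remove. The names `WORKLOADS` and `energy_kwh` are illustrative only.

```python
# Figures from the table: (power draw in watts, minimum runtime in hours).
# Assumption: power draw is constant at the listed value for the entire run.
WORKLOADS = {
    "Climate Modeling": (1500, 48),
    "Genetic Simulation": (1200, 36),
    "Molecular Dynamics": (1350, 40),
}


def energy_kwh(power_w: float, hours: float) -> float:
    """Energy drawn (and released as heat) over a run, in kilowatt-hours."""
    return power_w * hours / 1000.0


for name, (power_w, hours) in WORKLOADS.items():
    print(f"{name}: at least {energy_kwh(power_w, hours):.1f} kWh")
```

Under these assumptions the climate run alone accounts for at least 72 kWh, all of which leaves the system as heat.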

Even a slight imbalance in airflow can push GPU temperatures 10°C higher — enough to trigger throttling, slow performance, or worse, an unexpected crash halfway through a week-long run.

The Silent Partner in Every Breakthrough

When people talk about performance, they usually mean processors and graphics cards. But ask anyone who’s built or maintained a research cluster, and they’ll tell you — the chassis quietly makes or breaks everything.

A good GPU server case doesn’t just hold components together. It shapes how air moves, how noise travels, and how efficiently heat escapes. In high-density environments, where every degree matters, this hidden engineering becomes the difference between sustained performance and system fatigue.

At OneChassis, we’ve spent years studying that invisible flow. Our engineers often say, “air behaves like data — if it meets resistance, it slows everything down.” That’s why we design cases where airflow paths are clean, fan pressure zones are balanced, and each GPU bay receives steady cooling instead of turbulence. The result is simple: systems breathe easier and perform longer.

“After we swapped to a better-engineered chassis, our simulations stopped timing out. It wasn’t the code — it was the airflow.” — HPC Researcher, Applied Physics Lab

Engineering Details That Matter More Than You Think

1. Thermal Management

Temperature is a quiet saboteur. Modern GPU cases must handle both air and liquid cooling with precision. Hot-swap fans, front-to-rear airflow, and temperature-responsive control modules keep the system cool even under nonstop simulation loads. OneChassis integrates adaptive fan zoning and dust-resistant filters, allowing labs to maintain performance with minimal interruptions.
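As a rough illustration of what temperature-responsive, zoned fan control can look like, here is a minimal sketch of a linear fan curve applied per zone. It is not OneChassis firmware or any vendor's actual control logic: the thresholds (40°C, 80°C), the duty range (30% to 100%), the zone names, and the functions `fan_duty` and `zone_duties` are all assumptions chosen for the example.

```python
def fan_duty(temp_c: float, idle_temp: float = 40.0, max_temp: float = 80.0,
             min_duty: float = 30.0, max_duty: float = 100.0) -> float:
    """Map a temperature to a fan duty cycle (%) using a linear ramp.

    All thresholds are hypothetical defaults, not vendor settings.
    """
    if temp_c <= idle_temp:
        return min_duty
    if temp_c >= max_temp:
        return max_duty
    fraction = (temp_c - idle_temp) / (max_temp - idle_temp)
    return min_duty + fraction * (max_duty - min_duty)


def zone_duties(zone_temps: dict[str, list[float]]) -> dict[str, float]:
    """Drive each fan zone from the hottest sensor reading in that zone."""
    return {zone: fan_duty(max(temps)) for zone, temps in zone_temps.items()}


# Hypothetical sensor readings in °C, grouped by fan zone.
readings = {"gpu_front": [62.0, 71.0], "gpu_rear": [78.0, 84.0], "cpu": [55.0]}
for zone, duty in zone_duties(readings).items():
    print(f"{zone}: {duty:.0f}% duty")
```

Keying each zone to its hottest sensor means a single overheating GPU speeds up only the fans that serve it, rather than every fan in the chassis.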

2. Scalability

Scientific workloads evolve as fast as discovery itself. A modular case lets labs grow from 2 GPUs to 8 or even 10 without redesigning the whole rack. Proper PSU alignment and cable routing prevent signal interference — a small detail that can save hours of debugging in multi-node setups.

3. Reliability & Maintenance

Shared research environments demand reliability, not fuss. Tool-less panels and quick access to filters keep maintenance short, while EMI shielding protects the integrity of sensitive data. It’s the kind of design that fades into the background until the day it prevents a crash that could’ve cost weeks of work.

| Design Feature | Impact on Research | Typical Use Case |
| --- | --- | --- |
| Liquid Cooling | 20–30% longer stable runtime | Climate & fluid dynamics |
| Modular Layout | Easier system upgrades | AI and HPC labs |
| EMI Shielding | Prevents data corruption | Genomics & molecular modeling |

When Smart Design Accelerates Discovery

A few years ago, a European climate institute battled constant GPU throttling during week-long simulations. After upgrading to a pressure-balanced GPU chassis co-developed with OneChassis, their thermal delta dropped by nearly 12°C. Simulation times fell by 18%, and uptime reached a record 72 hours without a single GPU error.

Meanwhile, a genomic research lab in Asia ran into another familiar problem — spontaneous shutdowns mid-sequencing. Switching to a liquid-ready chassis with isolated GPU and CPU zones stabilized core temperatures and brought their failure rate down to zero.

| Metric | Old Setup | Upgraded Case |
| --- | --- | --- |
| Avg GPU Temp (°C) | 84 | 72 |
| Simulation Uptime (hrs) | 22 | 36+ |
| Failure Rate (%) | 7.5 | 0 |

These aren’t just engineering tweaks — they’re proof that hardware design can amplify discovery. When airflow, acoustics, and accessibility align, performance follows naturally.

Choosing the Right Case for Your Research

When you’re investing in infrastructure meant to run for years, the right chassis is not an accessory — it’s an insurance policy. Here’s a simple checklist many research teams use before choosing:

✅ Match the case’s airflow capacity to your total GPU TDP

✅ Check rack compatibility and lab noise tolerance

✅ Ensure modularity for future GPU or PSU upgrades

✅ Verify EMI shielding and dust-proofing

✅ Consider power efficiency and redundancy for 24/7 uptime
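For the first item on the checklist, a back-of-the-envelope estimate can help. The sketch below uses the standard heat-transfer relation (airflow = heat load / (air density × specific heat × temperature rise)) to estimate how much air must pass through a chassis. The air properties are sea-level approximations, and the example node (eight 350 W GPUs plus 400 W for everything else, with a 15°C inlet-to-exhaust rise) is purely hypothetical. Treat the result as a lower bound: it does not account for the airflow resistance of the chassis itself or for altitude.

```python
AIR_DENSITY_KG_M3 = 1.2          # approximate, sea level at about 20°C
AIR_SPECIFIC_HEAT_J_KG_K = 1005  # approximate, dry air
M3_PER_S_TO_CFM = 2118.88        # 1 cubic metre per second in cubic feet per minute


def required_airflow_cfm(total_heat_w: float, delta_t_c: float) -> float:
    """Estimate the airflow (CFM) needed to carry away total_heat_w watts
    with an inlet-to-exhaust air temperature rise of delta_t_c degrees C."""
    if delta_t_c <= 0:
        raise ValueError("delta_t_c must be positive")
    m3_per_s = total_heat_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_c)
    return m3_per_s * M3_PER_S_TO_CFM


# Hypothetical node: 8 GPUs at 350 W TDP each, plus 400 W for CPUs, memory, and drives.
total_heat_w = 8 * 350 + 400
print(f"Heat load: {total_heat_w} W")
print(f"Estimated airflow: {required_airflow_cfm(total_heat_w, delta_t_c=15.0):.0f} CFM")
```

Comparing this estimate against a chassis's rated airflow at realistic static pressure, with some headroom, gives a quick sanity check before purchase.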

And remember: true value isn't just in the spec sheet. It’s in the hours of uninterrupted data crunching and the peace of mind that comes with stability.

“Saving a few hundred dollars on a case can cost thousands in downtime.” — HPC Systems Engineer, Singapore

At OneChassis, our design philosophy centers on this long-term perspective — building enclosures that grow with your workloads and protect the investment that drives your research forward.

Explore more GPU Chassis solutions→

Cooler Systems, Clearer Science

In research, performance isn’t only about raw power. It’s about consistency, balance, and endurance — the ability to run for days, quietly and reliably, without breaking a sweat.

A well-engineered GPU server case transforms hardware chaos into harmony. It turns airflow into confidence, temperature control into uptime, and noise reduction into focus.

At OneChassis, we believe every discovery deserves a stable foundation — because behind every breakthrough, there's a system that kept its cool.

When AI Becomes A Threat, Infrastructure Becomes the Front Line

When AI Becomes A Threat, Infrastructure Becomes the Front Line

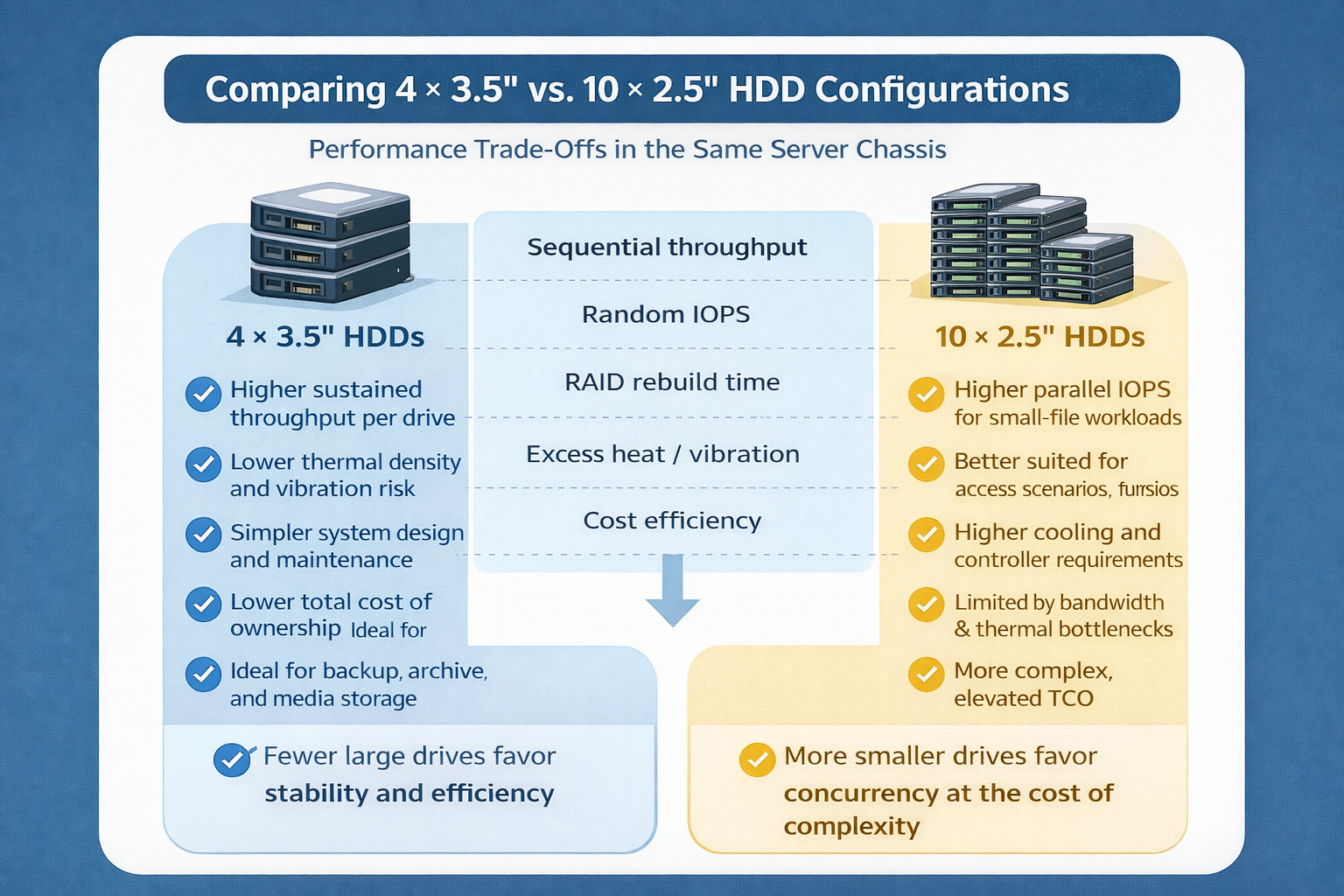

Storage Density Choices in the Same Server Chassis

Storage Density Choices in the Same Server Chassis

Welcoming 2026: Building the Next Year of AI Infrastructure Together

Welcoming 2026: Building the Next Year of AI Infrastructure Together

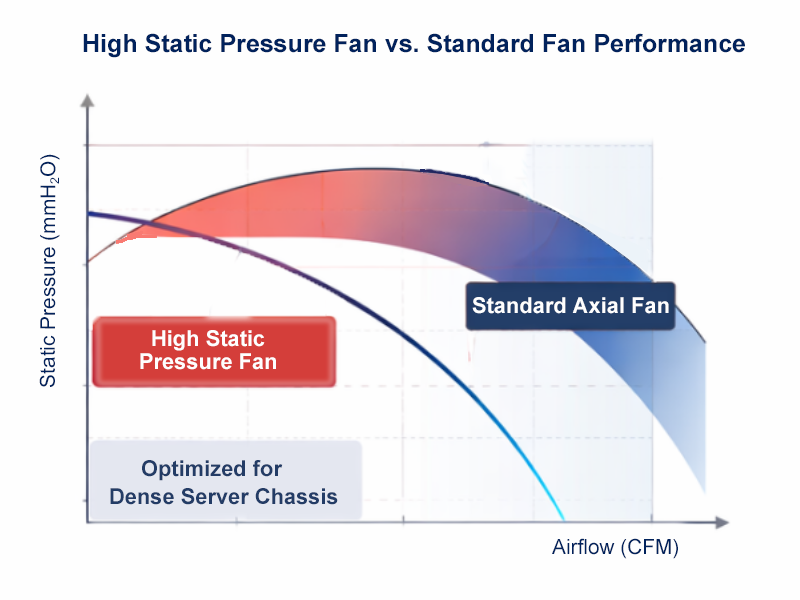

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones