AI Computing Server Chassis OEM/ODM Wholesale: Powering the AI Revolution

GPU Server Case Heat Management: Why It Matters

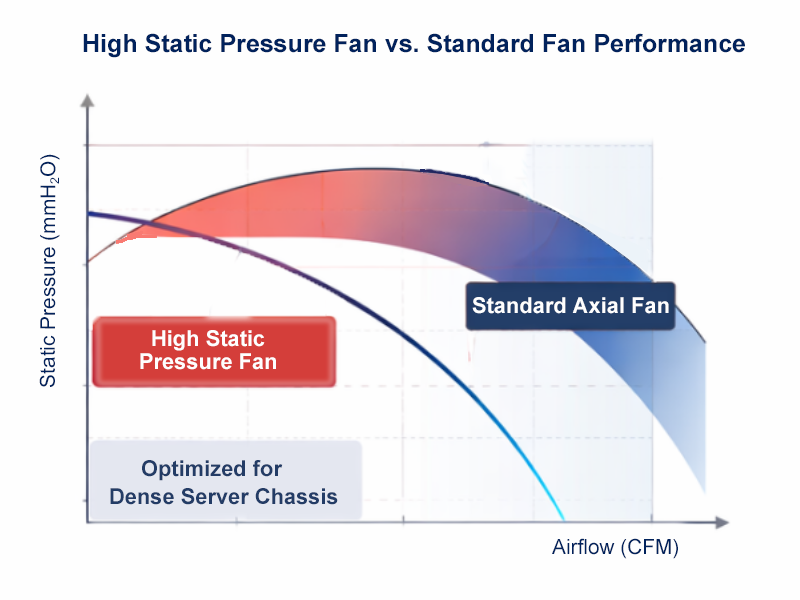

- High static pressure fans

- Smart fan zoning

- Honeycomb ventilation panels

- Optional liquid cooling for extreme builds

Heat Optimization Comparison

| Feature | Traditional Chassis | OneChassis GPU Case |
|---|---|---|
| Airflow | Basic front-to-back | Zoned high-velocity |
| GPU Cooling Support | Limited | Up to 8 double-width GPUs |
| Liquid Cooling Ready | Rare | Yes (optional) |
| Fan Control | Manual or fixed RPM | Smart PWM |
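
To make the difference between fixed-RPM and zoned PWM fan control concrete, here is a minimal sketch of how a per-zone fan curve could work. It is an illustration only, not OneChassis firmware: the `FanZone` class, the zone names, the temperature thresholds, and the sample readings are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class FanZone:
    """One group of fans cooling one region of the chassis (hypothetical model)."""
    name: str
    min_duty: int = 30           # percent; assumed floor so fans never stall
    max_duty: int = 100          # percent
    idle_temp_c: float = 40.0    # assumed: at or below this, run at min_duty
    full_temp_c: float = 80.0    # assumed: at or above this, run at max_duty

    def duty_for(self, temp_c: float) -> int:
        """Linearly interpolate the PWM duty cycle between the two thresholds."""
        if temp_c <= self.idle_temp_c:
            return self.min_duty
        if temp_c >= self.full_temp_c:
            return self.max_duty
        span = self.full_temp_c - self.idle_temp_c
        fraction = (temp_c - self.idle_temp_c) / span
        return round(self.min_duty + fraction * (self.max_duty - self.min_duty))


def plan_duties(zones, readings):
    """Return {zone name: duty %} driven by the hottest sensor in each zone."""
    return {z.name: z.duty_for(max(readings[z.name])) for z in zones}


# Hypothetical zones and sensor readings in degrees Celsius.
zones = [
    FanZone("gpu"),
    FanZone("cpu", full_temp_c=85.0),
    FanZone("storage", full_temp_c=60.0),
]
readings = {"gpu": [62.0, 71.0], "cpu": [55.0], "storage": [38.0]}
print(plan_duties(zones, readings))
```

Because each zone follows its own curve, a hot GPU bank can spin up its fans without forcing the storage fans to full speed, which is the practical benefit of zoning over a single fixed setting.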

Scalable Architecture for Future-Proof Deployments

One size doesn’t fit all in AI computing. You need a modular, flexible structure that lets you adapt as your workloads change. That’s where chassis compatibility makes a real difference.

The Storage Server Chassis and Dual-Node Server options from OneChassis support:

- ATX / E-ATX / custom-size boards

- Vertical or horizontal GPU layouts

- NVMe and SATA hot-swap bays

- Expansion slots for FPGA, DPU, and AI accelerators

Whether you're fine-tuning inference models or training large language models, we help you get the structure right from day one.

Customization That Works for You

OEM/ODM isn’t just slapping a logo on a box. It's about real customization that fits your workloads.

We support the tweaks you need:

- I/O layout for edge deployment

- GPU slot placement for optimal airflow

- Rail and handle systems for easy data center integration

- Logo printing, metal color, and even the front panel look

Your brand, your layout, your choice. That’s how OEM/ODM wholesale should work.

Built for AI, From Edge to Cloud

AI isn't confined to the cloud anymore. We're seeing rising demand for edge-ready AI systems, especially in:

- Video surveillance

- Smart factories

- Retail analytics

- Industrial automation

OneChassis supports ultra-dense builds in 2U and 4U formats. Some of our partners deploy 10+ GPUs in edge locations, paired with NAS Server Chassis for local storage and data processing.

AI Use Case Scenarios

| Deployment Type | Use Case | Recommended Chassis |
|---|---|---|
| Data Center | Model training, inference server | GPU Server Case |
| Edge AI | Smart camera hubs, factory AI box | Dual-Node Server |
| Cloud Hybrid | Multi-tenant GPU allocation | Liquid Cooling AI Multi-GPU Server |
| NAS + AI | Storage-heavy AI use | NAS Server Chassis |

Why OEM/ODM Matters in Today’s AI Game

Time-to-market is everything. With platforms like NVIDIA MGX setting reference standards, OEM/ODM partners like OneChassis help you:

- Launch custom AI products faster

- Cut R&D and tooling costs

- Focus your engineering time on your IP—not hardware headaches

And yeah, wholesale pricing doesn’t hurt either.

Wrapping Up: What to Look for in an AI Chassis Supplier

Here’s the takeaway. When you’re choosing a partner for AI server chassis, don’t settle for generic. Go for:

- Thermal intelligence: Your GPUs will thank you.

- Modular flexibility: Because your stack will change.

- Deep customization: Not just looks—real structural tweaks.

- Application-specific design: Edge ≠ Cloud. Build accordingly.

- Scalable pricing: Especially for bulk and long-term deals.

OneChassis delivers all of this, backed by years of experience in OEM/ODM projects for global clients across AI, HPC, and enterprise tech. Check out our full GPU Server Case lineup or explore Dual-Node Servers for your next AI infrastructure project.

Let us know if you'd like a customized comparison or an OEM quote template to support your project planning. Your AI ambitions deserve the right structure.

Ready to build smarter?

When AI Becomes A Threat, Infrastructure Becomes the Front Line

When AI Becomes A Threat, Infrastructure Becomes the Front Line

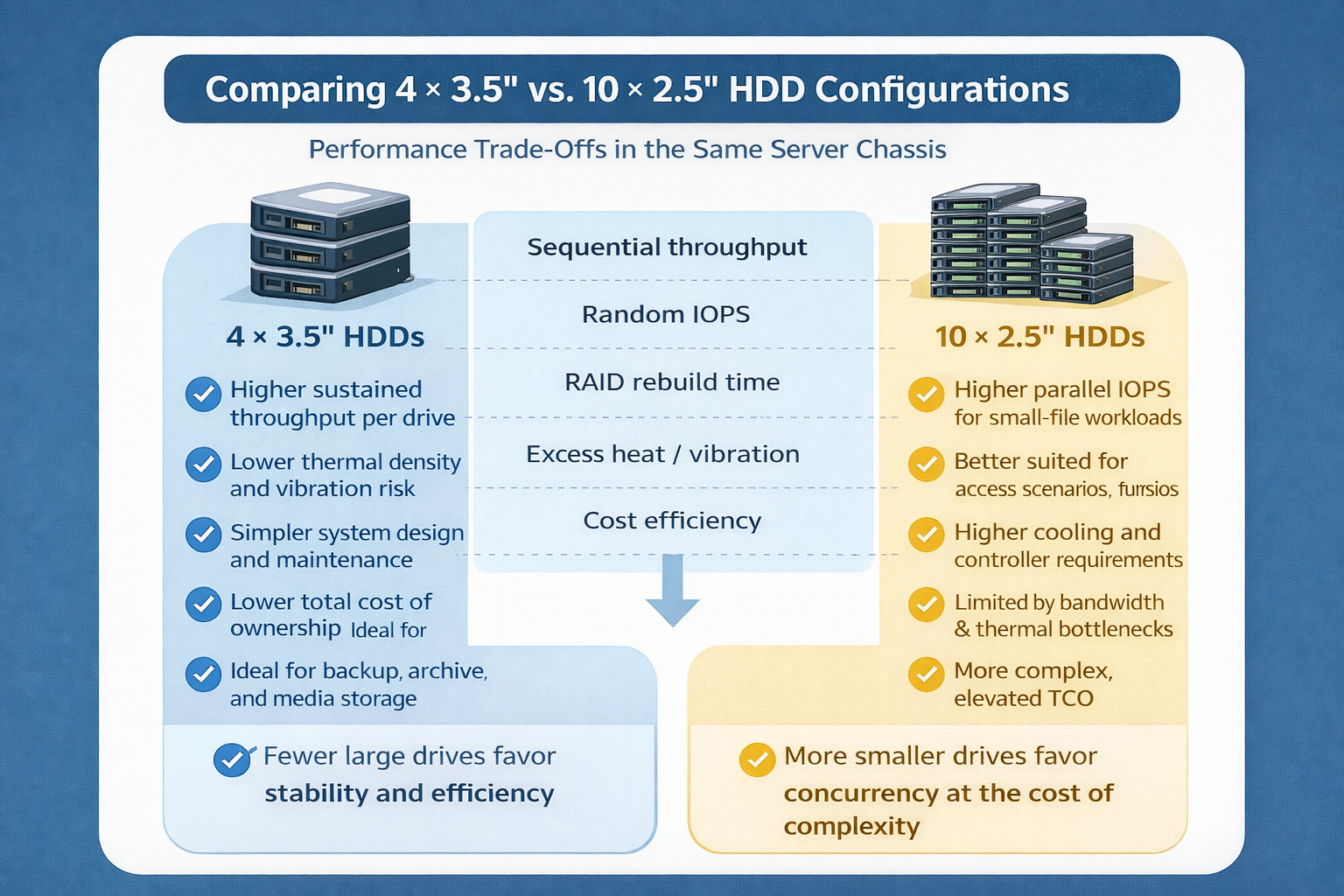

Storage Density Choices in the Same Server Chassis

Storage Density Choices in the Same Server Chassis

Welcoming 2026: Building the Next Year of AI Infrastructure Together

Welcoming 2026: Building the Next Year of AI Infrastructure Together

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones