AI model training, 3D rendering, data analytics—none of these workloads wait around for slow hardware. Today’s performance race is all about multi-GPU setups. But it’s not just about adding more graphics cards—it’s about creating a chassis environment that keeps them cool, stable, and talking to each other efficiently.

Why Multi-GPU Servers Are Taking Off

From deep learning to simulation, modern workloads thrive on parallel computing. Instead of one GPU doing it all, servers now run 4, 8, or even 16 GPUs to accelerate massive data tasks.

| Configuration | GPU Count | Performance Gain (vs. 1 GPU) | Typical Use Case |
|---|---|---|---|
| Entry-Level Workstation | 2 | 1.8× | AI inference / design |
| Mid-Tier Server | 4 | 3.6× | Model training / CAD |
| High-Density Server | 8 | 6.5× | Deep learning / simulation |
| HPC Cluster Node | 16 | 12× | Research / data center |
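The table is easier to read as scaling efficiency: the measured speedup divided by the GPU count, where 1.0 would be perfectly linear scaling. The Python sketch below assumes the gain column is measured against a single GPU. The `SCALING` dictionary and the `scaling_efficiency` helper are hypothetical names used only for illustration.

```python
# Speedups copied from the table above (assumed to be relative to one GPU).
SCALING = {
    2: 1.8,
    4: 3.6,
    8: 6.5,
    16: 12.0,
}


def scaling_efficiency(gpus: int, speedup: float) -> float:
    """Fraction of ideal linear speedup actually achieved (1.0 = perfect scaling)."""
    if gpus <= 0:
        raise ValueError("gpus must be positive")
    return speedup / gpus


if __name__ == "__main__":
    for gpus, speedup in SCALING.items():
        eff = scaling_efficiency(gpus, speedup)
        print(f"{gpus:>2} GPUs: {speedup:>4.1f}x speedup, {eff:.0%} efficiency")
```

With these figures, efficiency holds at about 90% for 2 and 4 GPUs, then falls to about 81% at 8 GPUs and 75% at 16. The gap to linear scaling widens as GPUs are added, which is the practical cost the following sections deal with.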

According to NVIDIA, workloads that once took weeks on one GPU can now finish in under 24 hours with eight. But scaling GPUs also scales the challenges—heat, power, and airflow.

Challenges of Heat, Power, and Space

Each high-end GPU can draw up to 700W under full load. Multiply that by eight, and you’re looking at roughly 5,600 watts of heat from the GPUs alone. Without smart cooling, even top-tier GPUs throttle fast.

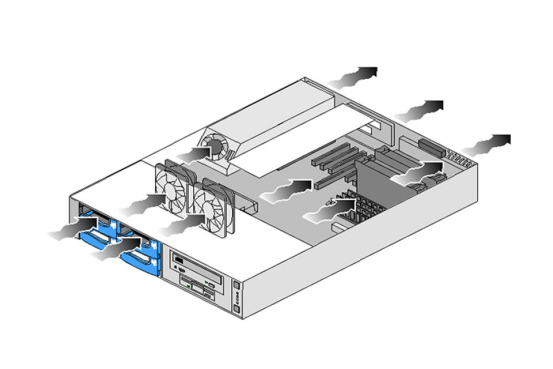

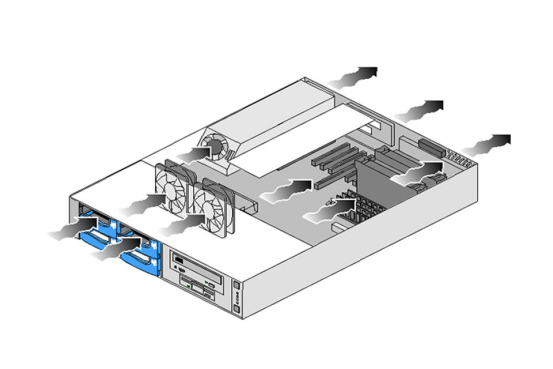

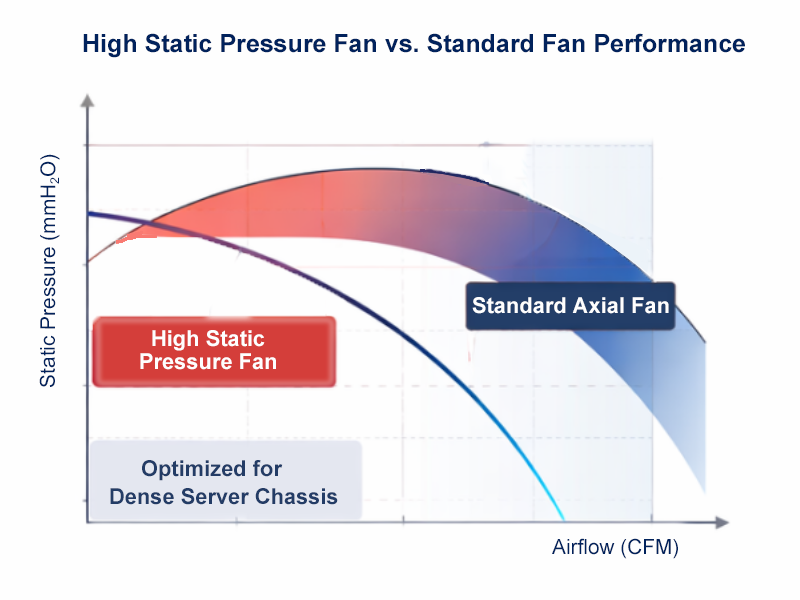

That’s why airflow design defines real performance. At OneChassis, we use modular fan walls and optimized air tunnels that maintain even airflow across all GPUs. Depending on the build, our cases can fit dual 120mm or triple 80mm high-pressure fans, balancing thermal performance and space efficiency.
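To put those heat figures into airflow terms, a common first-order estimate uses the sensible-heat relation Q = P / (ρ · c_p · ΔT): the volume of air Q needed to carry away P watts while the air warms by ΔT. The sketch below assumes sea-level air (ρ ≈ 1.2 kg/m³, c_p ≈ 1005 J/(kg·K)) and counts only GPU heat. `required_airflow_cfm` is a hypothetical helper, and real fans deliver less than their free-air rating once they push against dense heatsinks.

```python
# Sea-level air properties (assumed); real inlet conditions vary.
AIR_DENSITY_KG_M3 = 1.2
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0
M3_PER_S_TO_CFM = 2118.88  # 1 m^3/s expressed in cubic feet per minute


def required_airflow_cfm(heat_watts: float, delta_t_c: float) -> float:
    """Airflow needed to remove heat_watts with an inlet-to-outlet rise of delta_t_c."""
    if delta_t_c <= 0:
        raise ValueError("temperature rise must be positive")
    volumetric_m3_s = heat_watts / (
        AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_c
    )
    return volumetric_m3_s * M3_PER_S_TO_CFM


if __name__ == "__main__":
    gpu_heat = 8 * 700  # eight GPUs at the 700 W figure quoted above
    for rise in (10, 15, 20):
        print(f"dT = {rise} C -> {required_airflow_cfm(gpu_heat, rise):.0f} CFM")
```

For 5,600 W of GPU heat and a 10 °C rise, this gives roughly 980 CFM, and halving the allowed temperature rise doubles the airflow required. That is one reason high-pressure fans and ducted air tunnels matter in dense GPU builds.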

Air Cooling vs. Liquid Cooling

Air cooling remains the go-to for most servers, but liquid and hybrid systems are catching up, especially in GPU-dense racks.

Liquid-assisted designs can cut GPU temps by up to 20%, boosting longevity and stability. That’s why we are developing modular layouts that support both cooling modes, helping customers upgrade seamlessly as density rises.

Smart Layouts and Scalability

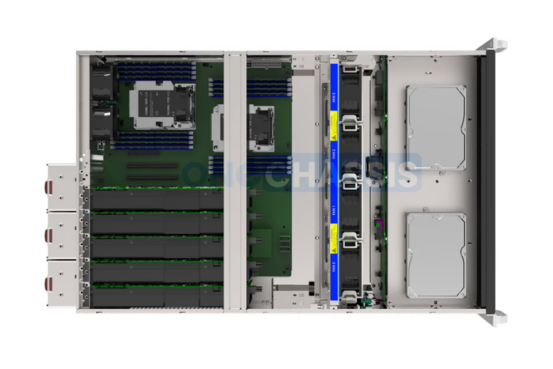

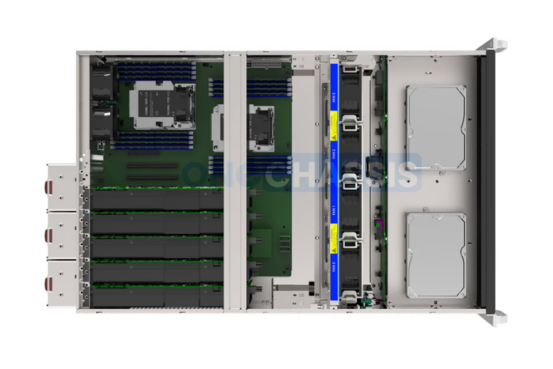

The chassis layout determines how GPUs communicate and stay cool. Modern designs align GPUs around PCIe or NVLink lanes to reduce latency, with full-length GPU support, riser flexibility, and isolated airflow zones.

OneChassis platforms scale easily between 4-GPU and 8-GPU configurations, so upgrading your compute doesn’t mean rebuilding your setup.

What’s Inside an AI Training Server?

| Component | Specification | Notes |
|---|---|---|
| GPU | 8× NVIDIA H100 80GB | Linked via NVLink |
| CPU | Dual AMD EPYC 9654 | 192 cores total |
| Memory | 2TB DDR5 ECC | High throughput for AI workloads |
| Cooling | 6× 120mm PWM fans | Balanced static pressure |
| Power | 2× 3000W redundant PSU | 80 PLUS Titanium efficiency |
| Case | 4U GPU chassis | Modular airflow & hot-swap fans |

This setup delivers 1.5 PFLOPS of FP16 performance, but it only stays stable thanks to case-level details like anti-vibration mounts and rigid PCIe supports, which are standard in every OneChassis design.

Power Delivery Backbone

With multiple GPUs pulling hundreds of watts each, clean power delivery is crucial. Poor cable routing can easily block airflow or cause instability.

Our chassis use integrated power backplanes, reinforced channels, and redundant PSU bays to keep power balanced and cables tidy for maximum efficiency.
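Redundant PSU bays only protect the system if the remaining supplies can still carry the full load after one fails. Here is a minimal N+1 sizing sketch in Python, assuming the surviving PSUs should run at no more than 80% of their rating. The `psus_needed` function, the 80% headroom, and the example load are hypothetical, not OneChassis specifications.

```python
import math


def psus_needed(load_watts: float, psu_watts: float,
                redundant: int = 1, headroom: float = 0.8) -> int:
    """Smallest PSU count that still carries load_watts with `redundant` units failed.

    The surviving PSUs are kept at or below `headroom` (a fraction of rated output).
    """
    if load_watts <= 0 or psu_watts <= 0:
        raise ValueError("load and PSU rating must be positive")
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    usable_per_psu = psu_watts * headroom
    active = math.ceil(load_watts / usable_per_psu)  # PSUs needed to share the load
    return active + redundant                        # plus spares allowed to fail


if __name__ == "__main__":
    # Hypothetical full-system draw: GPUs, CPUs, memory, drives, and fans together.
    load = 6000
    print(psus_needed(load, psu_watts=3000))               # N+1
    print(psus_needed(load, psu_watts=3000, redundant=2))  # N+2
```

With a 6,000 W load and 3000 W units, this returns 4 for N+1 (three sharing the load plus one spare) and 5 for N+2. Raising the headroom fraction lowers the count but leaves less margin for transient GPU power spikes.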

Rack-Level Efficiency

At rack scale, small design details matter. Optimized 4U and 5U cases stack efficiently, maintain airflow alignment, and simplify maintenance.

Some of our clients deploy 10 GPU servers per rack, each with adaptive fan control and redundant 3000W PSUs, delivering dense compute power without the heat headaches.
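Rack density comes down to two budgets: rack units and power. At the rack level, power is effectively also the heat load the room must remove. The quick check below assumes a 42U rack and a hypothetical per-server draw. `RackPlan` is an illustrative name, and a real plan must also reserve space for switches and PDUs.

```python
from dataclasses import dataclass


@dataclass
class RackPlan:
    servers: int
    chassis_height_u: int       # 4 for a 4U chassis, 5 for a 5U chassis
    watts_per_server: float     # hypothetical sustained draw per server
    rack_height_u: int = 42     # common full-height rack (assumed)

    @property
    def used_u(self) -> int:
        return self.servers * self.chassis_height_u

    @property
    def fits(self) -> bool:
        return self.used_u <= self.rack_height_u

    @property
    def total_kw(self) -> float:
        # Nearly all electrical power ends up as heat the room must remove.
        return self.servers * self.watts_per_server / 1000


if __name__ == "__main__":
    for height in (4, 5):
        plan = RackPlan(servers=10, chassis_height_u=height, watts_per_server=3000)
        print(f"{height}U x10: {plan.used_u}U used, fits={plan.fits}, "
              f"{plan.total_kw:.1f} kW")
```

Ten 4U servers use 40U and fit in a 42U rack, while ten 5U servers would need 50U, so a 5U design holds at most eight per rack. At the assumed 3,000 W each, ten servers put 30 kW into a single rack.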

Why the Chassis Itself Impacts GPU Performance

A poorly ventilated case can cut 10–15% of GPU output due to throttling. Conversely, a precision-engineered chassis like those from OneChassis keeps GPUs at max boost clocks longer, directly improving ROI. That’s why leading AI labs and rendering studios see the chassis as a performance enabler, not just a container.
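The throttling figure translates directly into wall-clock time. If a job is throughput-bound and throttling costs a steady fraction of GPU output, the runtime stretches by a factor of 1 / (1 - loss). This small Python sketch assumes the loss is uniform over the whole run; `stretched_runtime_hours` is a hypothetical helper.

```python
def stretched_runtime_hours(baseline_hours: float, throttle_loss: float) -> float:
    """Wall-clock hours for a job when sustained GPU throughput drops by throttle_loss.

    Assumes the job is throughput-bound and the loss is constant over the run.
    """
    if baseline_hours < 0:
        raise ValueError("baseline_hours must be non-negative")
    if not 0 <= throttle_loss < 1:
        raise ValueError("throttle_loss must be in [0, 1)")
    return baseline_hours / (1 - throttle_loss)


if __name__ == "__main__":
    for loss in (0.10, 0.15):
        hours = stretched_runtime_hours(24, loss)
        print(f"{loss:.0%} throttling: a 24 h job takes {hours:.1f} h")
```

Under these assumptions, a 24-hour job grows to roughly 26.7 hours at 10% loss and 28.2 hours at 15%. Those extra hours are paid for in power and in hardware that cannot start the next job.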

Modular, Smarter GPU Chassis of the Future

Next-gen chassis designs are becoming modular and intelligent:

· Smart fan control by temperature zones

· Tool-less GPU swaps

· Interchangeable air/liquid cooling

· Real-time thermal sensors
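Smart fan control by temperature zone usually means mapping each zone’s sensor reading to a fan duty cycle through a curve. The minimal Python sketch below does this with linear interpolation between curve points. The curve values, zone names, and the `duty_for_temp` / `zone_duties` helpers are all hypothetical. A production controller would likely also add hysteresis and ramp-rate limits.

```python
from bisect import bisect_right

# Hypothetical fan curve: (zone temperature in °C, PWM duty in %), sorted by temperature.
FAN_CURVE = [(30.0, 20.0), (50.0, 40.0), (70.0, 75.0), (85.0, 100.0)]


def duty_for_temp(temp_c: float, curve=FAN_CURVE) -> float:
    """Linearly interpolate PWM duty for a temperature, clamped to the curve ends."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]
    if temp_c >= temps[-1]:
        return curve[-1][1]
    i = bisect_right(temps, temp_c)  # index of first point strictly hotter than temp_c
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


def zone_duties(zone_temps: dict[str, float]) -> dict[str, float]:
    """Per-zone duty cycles, so each fan group responds to its own zone."""
    return {zone: duty_for_temp(t) for zone, t in zone_temps.items()}


if __name__ == "__main__":
    readings = {"gpu_front": 62.0, "gpu_rear": 78.0, "cpu": 45.0}
    duties = zone_duties(readings)
    for zone, duty in duties.items():
        print(f"{zone}: {duty:.1f}% PWM")
    # A fan wall shared by several zones follows its hottest zone.
    print(f"shared fan wall: {max(duties.values()):.1f}% PWM")
```

Giving each zone its own duty lets the CPU fans stay quiet while the GPU fans ramp up. Any fan wall shared between zones still has to follow the hottest one.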

These features are already shaping our new OneChassis lineup, built for the AI computing era.

Multi-GPU systems are revolutionizing computing power—but the true enabler is the scalable chassis behind them.

Every airflow curve, fan mount, and cable route contributes to performance and lifespan. So if you’re planning your next build, don’t treat the case as a box—see it as your performance foundation.

When AI Becomes A Threat, Infrastructure Becomes the Front Line

When AI Becomes A Threat, Infrastructure Becomes the Front Line

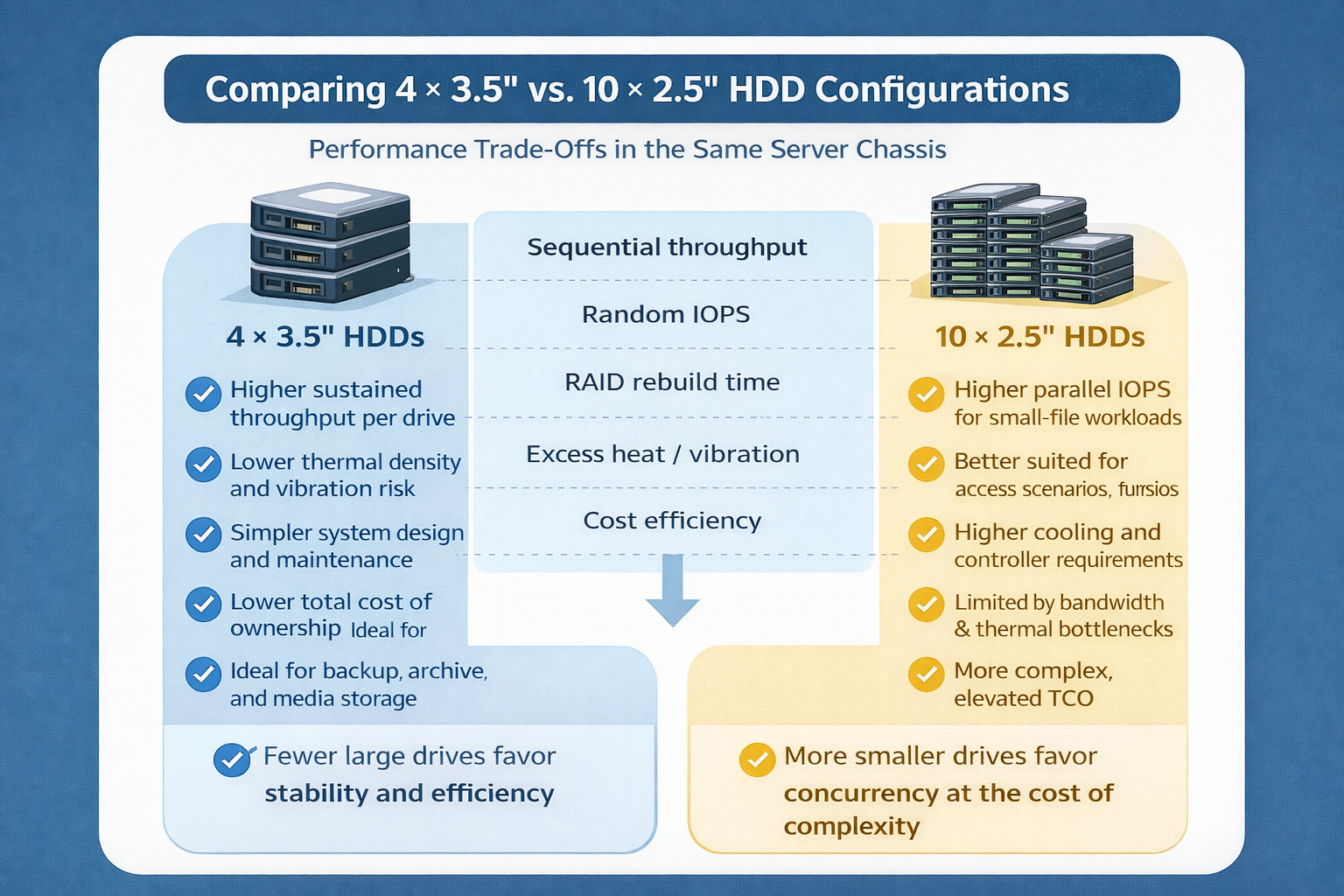

Storage Density Choices in the Same Server Chassis

Storage Density Choices in the Same Server Chassis

Welcoming 2026: Building the Next Year of AI Infrastructure Together

Welcoming 2026: Building the Next Year of AI Infrastructure Together

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones