Air Cooling vs. Liquid Cooling

Cooling Strategies That Make or Break Performance

Keeping hardware cool isn’t just a technical detail—it’s the backbone of stable operations, whether you’re running a single workstation or a hyperscale data center. The real debate comes down to two camps: air cooling vs. liquid cooling. Both have their perks, but the right choice depends on workload intensity, rack density, and long-term operating costs.

Air Cooling – The Old Reliable

Air-based systems use fans and heat sinks to move heat away from CPUs, GPUs, and other hot spots.

Why it still works:

- Cost-friendly entry point – no pumps, radiators, or tubing to worry about.
- Straightforward setup – quick installs, predictable airflow patterns.
- Good fit for lighter workloads – offices, industrial PCs, or mid-range server stacks.

Where it falls short:

- Noise factor – those high-RPM fans can sound like a jet engine.
- Bigger footprint – heat sinks and airflow space eat up rack real estate.
- Thermal ceiling – once you hit high-density racks or overclocked chips, airflow alone can’t keep temps stable.

For many SMBs or system integrators, air is fine. But in dense server rooms, it’s often the bottleneck.
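The thermal ceiling follows from the sensible-heat relation Q = ρ × V × c_p × ΔT, where V is the volumetric airflow. The air a rack needs grows linearly with its heat load. The Python sketch below estimates that airflow, assuming textbook air properties near room temperature and a 15 K inlet-to-outlet temperature rise. The function name and example rack sizes are illustrative only, not OneChassis specifications.

```python
# Sketch: airflow needed to carry a rack's heat load with air, using the
# sensible-heat relation Q = rho * V_dot * c_p * delta_T.
# Property values are approximate for air near room temperature at sea level;
# delta_T (inlet-to-outlet temperature rise) is an assumption.

AIR_DENSITY = 1.2      # kg/m^3, approx. at ~20 degC
AIR_CP = 1005.0        # J/(kg*K), specific heat of air
M3S_TO_CFM = 2118.88   # 1 m^3/s is about 2118.88 cubic feet per minute


def required_airflow_cfm(heat_load_w: float, delta_t_k: float = 15.0) -> float:
    """Volumetric airflow (CFM) needed to remove heat_load_w watts
    while the air warms by delta_t_k kelvin."""
    if heat_load_w < 0 or delta_t_k <= 0:
        raise ValueError("heat load must be >= 0 and delta_T must be > 0")
    flow_m3s = heat_load_w / (AIR_DENSITY * AIR_CP * delta_t_k)
    return flow_m3s * M3S_TO_CFM


if __name__ == "__main__":
    # Hypothetical rack heat loads, from a light stack to a dense GPU rack.
    for rack_kw in (5, 15, 40):
        cfm = required_airflow_cfm(rack_kw * 1000)
        print(f"{rack_kw:>3} kW rack -> ~{cfm:,.0f} CFM")
```

Because the result scales linearly, a rack that dissipates eight times the heat needs roughly eight times the airflow at the same temperature rise. That is where fan speed, noise, and airflow space start to become the limiting factors.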

Liquid Cooling – The Performance Play

Liquid systems take thermal management up a notch, using coolant blocks, pumps, and radiators to absorb and move heat more efficiently than air ever could.

Why the industry is shifting:

- Serious heat dissipation – moves heat up to 100x more effectively than air.
- Rack density win – cools more CPUs/GPUs in less space, ideal for HPC and AI training clusters.
- Energy savings – less fan power, lower PUE (Power Usage Effectiveness), and a leaner total cost of ownership.
- Quieter racks – pumps hum; fans roar. Guess which clients prefer.
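PUE (Power Usage Effectiveness) is total facility energy divided by the energy delivered to IT equipment, so 1.0 would mean zero overhead. The sketch below computes it for two hypothetical facilities with the same 1 MW IT load that differ only in cooling overhead. The kW figures are invented for illustration and are not measurements.

```python
# Sketch: PUE = total facility power / IT equipment power (1.0 is ideal).
# All kW values in the example run are hypothetical.


def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Return PUE for a facility with the given IT load and overheads."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    total_kw = it_kw + cooling_kw + other_overhead_kw
    return total_kw / it_kw


if __name__ == "__main__":
    it_load = 1000.0  # kW of servers, storage, and network (hypothetical)
    air = pue(it_load, cooling_kw=400.0, other_overhead_kw=100.0)
    liquid = pue(it_load, cooling_kw=150.0, other_overhead_kw=100.0)
    print(f"air-cooled scenario PUE:    {air:.2f}")
    print(f"liquid-cooled scenario PUE: {liquid:.2f}")
```

One subtlety: fans inside the servers are normally counted as IT load, so PUE mainly reflects facility-side savings such as CRAH/CRAC fans and chillers. Lower server fan power shows up in the total energy bill rather than directly in the ratio.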

Trade-offs to note:

- Higher upfront cost.
- Regular maintenance checks for leaks and fluid levels.
- Requires careful planning, though the investment can pay back quickly in dense data centers.
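How quickly the investment pays back depends on the inputs. A simple, undiscounted payback estimate divides the extra upfront cost by the net annual savings. The sketch below uses hypothetical figures for extra capex, kW saved, electricity price, and added maintenance, purely for illustration. Substitute real quotes and tariffs before drawing conclusions.

```python
# Sketch: simple (undiscounted) payback period for a cooling upgrade.
# All inputs in the example run are hypothetical placeholders.

HOURS_PER_YEAR = 8760  # 24 h x 365 days, continuous operation assumed


def annual_energy_savings(saved_kw: float, price_per_kwh: float) -> float:
    """Yearly cost saved by drawing saved_kw less power around the clock."""
    return saved_kw * HOURS_PER_YEAR * price_per_kwh


def payback_years(extra_capex: float, annual_savings: float,
                  annual_extra_maintenance: float = 0.0) -> float:
    """Years until net yearly savings cover the extra upfront cost.
    Returns infinity when net savings are zero or negative."""
    net = annual_savings - annual_extra_maintenance
    if net <= 0:
        return float("inf")
    return extra_capex / net


if __name__ == "__main__":
    savings = annual_energy_savings(saved_kw=150.0, price_per_kwh=0.10)
    years = payback_years(extra_capex=250_000.0,
                          annual_savings=savings,
                          annual_extra_maintenance=20_000.0)
    print(f"annual energy savings: ${savings:,.0f}")
    print(f"simple payback: {years:.1f} years")
```

A discounted cash-flow model would lengthen the estimate somewhat, but the simple ratio is enough to show which inputs dominate.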

Hybrid setups are also gaining ground—air handles the basics, liquid takes care of hotspots.

| Factor | Air Cooling | Liquid Cooling |
|---|---|---|
| Noise | High fan noise | Low, pump-driven |
| Footprint | Larger, bulky heat sinks | Compact, higher density |
| Thermal Limit | Moderate workloads | Heavy loads / HPC |
| Cost Curve | Cheaper upfront | Better long-term ROI |
| Maintenance | Dust cleaning, minimal parts | Leak checks, fluid upkeep |

Where OneChassis Adds Value

At OneChassis, we don’t just ship cases—we design thermal-first GPU server chassis built to handle both cooling methods. Whether you’re sticking with traditional airflow or deploying liquid-cooled racks for AI and HPC, our chassis lines are optimized for:

- Scalable rack density without overheating.
- Lower TCO by maximizing airflow paths and liquid-ready layouts.
- Future-proof designs that fit both conventional and cutting-edge cooling solutions.

If uptime, density, and performance matter, the chassis you choose is just as critical as the cooling system inside it. Air keeps things simple. Liquid pushes the limits. OneChassis gives you the right foundation for either.

When AI Becomes A Threat, Infrastructure Becomes the Front Line

When AI Becomes A Threat, Infrastructure Becomes the Front Line

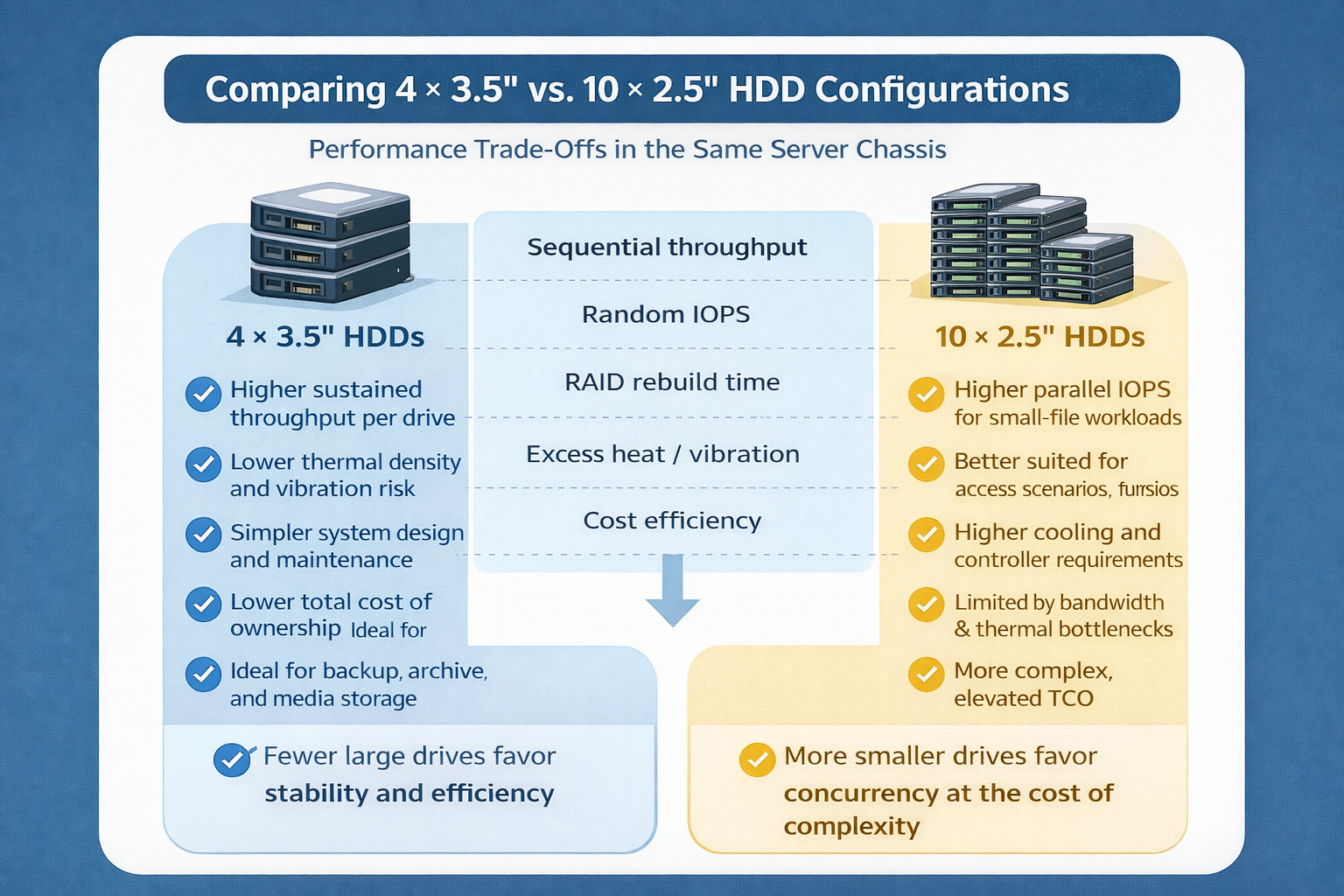

Storage Density Choices in the Same Server Chassis

Storage Density Choices in the Same Server Chassis

Welcoming 2026: Building the Next Year of AI Infrastructure Together

Welcoming 2026: Building the Next Year of AI Infrastructure Together

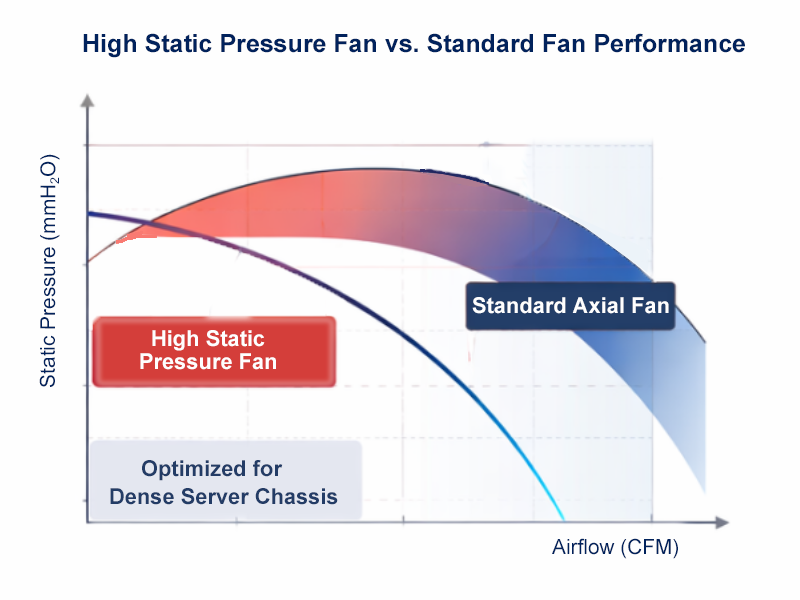

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones