GPU Server Chassis: The Backbone Behind Real Compute Power

Update time : 2025-12-02 11:42:00

Most people think a GPU server chassis is just a metal shell. But in real HPC, ML, and large-scale compute clusters, the enclosure is the quiet workhorse that keeps everything stable, cool, and running at full throttle. It’s not “a box” — it’s infrastructure. And for teams that can’t afford downtime, thermal drift, or noisy power rails, a purpose-built chassis is as critical as the GPUs themselves. This is where OneChassis steps in: engineered airflow, solid power paths, and layouts tuned for heavy workloads.

In high-density compute environments, the chassis decides whether your GPUs run at 100% or choke under load. A suitable GPU server chassis delivers:

- Smart thermal paths that stop throttling

- Stable power for multi-GPU arrays

- Reinforced frames that cut vibration noise

- Tight cable and I/O routing that reduces latency

- Rack-friendly density that avoids wasted space

> What Actually Keeps the System Stable

Thermal Control That Doesn’t Guess

Dense GPU stacks push brutal heat. Without managed airflow, you get thermal throttling, shortened component life, and surprise reboots. A real GPU chassis uses high-pressure fan walls, ducted air channels, and liquid-loop support to keep temps flat under 24/7 load.
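To put a rough number on "managed airflow", here is a minimal sketch based on the standard steady-state heat balance (heat removed = air density × specific heat × volumetric flow × air temperature rise). The function name, the 8-GPU node, and every wattage below are illustrative assumptions, not OneChassis specifications.

```python
# Rough airflow estimate for a chassis, from the steady-state heat balance
# P = rho * cp * Q * dT. All input numbers below are illustrative assumptions.

AIR_DENSITY = 1.2          # kg/m^3, approximate for air near 20 C at sea level
AIR_SPECIFIC_HEAT = 1005   # J/(kg*K), approximate for dry air
M3S_TO_CFM = 2118.88       # 1 m^3/s expressed in cubic feet per minute


def required_airflow_cfm(heat_watts: float, delta_t_c: float) -> float:
    """Airflow (CFM) needed to carry heat_watts away with a delta_t_c rise in air temperature."""
    if delta_t_c <= 0:
        raise ValueError("delta_t_c must be positive")
    flow_m3s = heat_watts / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_c)
    return flow_m3s * M3S_TO_CFM


if __name__ == "__main__":
    # Hypothetical node: 8 GPUs at 700 W each plus 1,000 W for CPUs, drives, and fans.
    total_heat_w = 8 * 700 + 1000
    cfm = required_airflow_cfm(total_heat_w, delta_t_c=15)
    print(f"Approximate airflow needed: {cfm:.0f} CFM")
```

The estimate ignores recirculation, altitude, and fan curves, so treat it as a sanity check on fan-wall sizing rather than a design figure. Halving the allowed temperature rise doubles the airflow the chassis has to move.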

Power That Doesn’t Flinch

Multiple GPUs can spike power hard. Cheap cases buckle. Purpose-built ones pack redundant PSUs, beefy busbars, and routed cabling that stops voltage sag during peak cycles.
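A quick way to reason about redundant PSUs is to check whether the remaining supplies can still carry peak load when one fails. The sketch below is only an illustration: the function, its parameters, the `transient_factor` multiplier, and all wattages are hypothetical values chosen for the example, not figures from any real chassis or GPU datasheet.

```python
# N+1 power-budget check. Every wattage here is a made-up example value.

def psu_headroom_watts(
    gpu_count: int,
    gpu_peak_w: float,
    platform_w: float,
    psu_count: int,
    psu_rated_w: float,
    redundant_psus: int = 1,
    transient_factor: float = 1.0,
) -> float:
    """Spare watts with `redundant_psus` supplies offline; a negative result means overload.

    transient_factor scales GPU draw to model short power spikes (assumed, not measured).
    """
    usable_psus = psu_count - redundant_psus
    if usable_psus <= 0:
        raise ValueError("need at least one non-redundant PSU")
    peak_load_w = gpu_count * gpu_peak_w * transient_factor + platform_w
    return usable_psus * psu_rated_w - peak_load_w


if __name__ == "__main__":
    # Hypothetical build: 8 GPUs at 700 W, 1,000 W platform, four 2,700 W PSUs in N+1.
    steady = psu_headroom_watts(8, 700, 1000, psu_count=4, psu_rated_w=2700)
    spiky = psu_headroom_watts(8, 700, 1000, psu_count=4, psu_rated_w=2700,
                               transient_factor=1.5)
    print(f"Steady-state headroom: {steady:.0f} W")
    print(f"Headroom during spikes: {spiky:.0f} W")
```

In this made-up build the steady-state budget has margin, but the same hardware goes negative once spikes are modeled, which is the kind of gap that shows up as voltage sag in an undersized case.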

Mechanical Strength That Actually Matters

Large racks vibrate — especially in mixed deployments. A flimsy frame transfers that vibration straight into your GPUs. A reinforced chassis kills that issue with stiffened rails and shock-dampened mounts.

I/O That Fits Real Workloads

When training clusters or real-time analytics depend on low-latency links, port layout matters. A good chassis gives direct, short paths for NICs, storage, and fabric links.

> Why Stability Equals Money

In HPC or finance, any instability hits the bottom line:

- Downtime = missed windows

- Throttling = longer training cycles

- Restarts = wasted compute hours

- Bottlenecks = slower insights than competitors
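The "throttling = longer training cycles" point can be made concrete with simple arithmetic. This is a back-of-the-envelope sketch: the function name and the example figures (a 100-hour job, throttled half the time to 60% speed) are assumptions for illustration, not measured results.

```python
# How much longer a job runs when GPUs throttle part of the time.
# Example inputs are hypothetical, not benchmark data.

def stretched_hours(base_hours: float, throttled_share: float, throttled_speed: float) -> float:
    """Wall-clock hours for a job that needs base_hours at full speed.

    throttled_share: fraction of wall-clock time spent throttled (0 to 1).
    throttled_speed: throughput while throttled, relative to full speed (0 to 1].
    """
    if not 0 <= throttled_share <= 1:
        raise ValueError("throttled_share must be between 0 and 1")
    if not 0 < throttled_speed <= 1:
        raise ValueError("throttled_speed must be in (0, 1]")
    # Average throughput over the run, as a fraction of full speed.
    effective_speed = (1 - throttled_share) + throttled_share * throttled_speed
    return base_hours / effective_speed


if __name__ == "__main__":
    hours = stretched_hours(base_hours=100, throttled_share=0.5, throttled_speed=0.6)
    print(f"Job takes about {hours:.0f} hours instead of 100")
```

Multiply the extra hours by your own per-GPU-hour cost to estimate what throttling is worth in your environment.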

> What Sets GPU Chassis Apart

- Hot-swap fans and modular cooling zones

- Support for 4 to 10 or more GPUs in one unit

- Redundant power, modular trays, and replaceable blocks

- Growth-ready layouts you can scale later

> Quick Real-World Win

A global finance firm running fraud-scoring models started with generic servers. During peak trading, the GPUs overheated, throttled, and delayed detection cycles, costing the firm real money.

After moving to dedicated GPU server chassis, the firm fixed real production pain:

- Uptime jumped 35%

- Latency in fraud scoring dropped

- Maintenance noise nearly vanished

> Seeing the Larger Strategy

Teams spend millions on GPUs but sometimes drop them into bargain-bin chassis. That’s like buying a supercomputer engine and mounting it in cardboard. The right GPU chassis:

- Protects ROI

- Cuts cooling bills

- Speeds up model training

- Improves competitive timing

- Reduces long-term TCO

It’s never “just a box”. It’s the foundation of every stable compute stack.

Stay Cool. Stay Stable. Stay in Control!

Related News

When AI Becomes A Threat, Infrastructure Becomes the Front Line

When AI Becomes A Threat, Infrastructure Becomes the Front Line

Jan 30,2026

When AI becomes a security risk, infrastructure becomes the front line. Learn how GPU server chassis design affects AI stability, security, and uptime—and how OneChassis builds reliable GPU platforms for enterprise AI workloads.

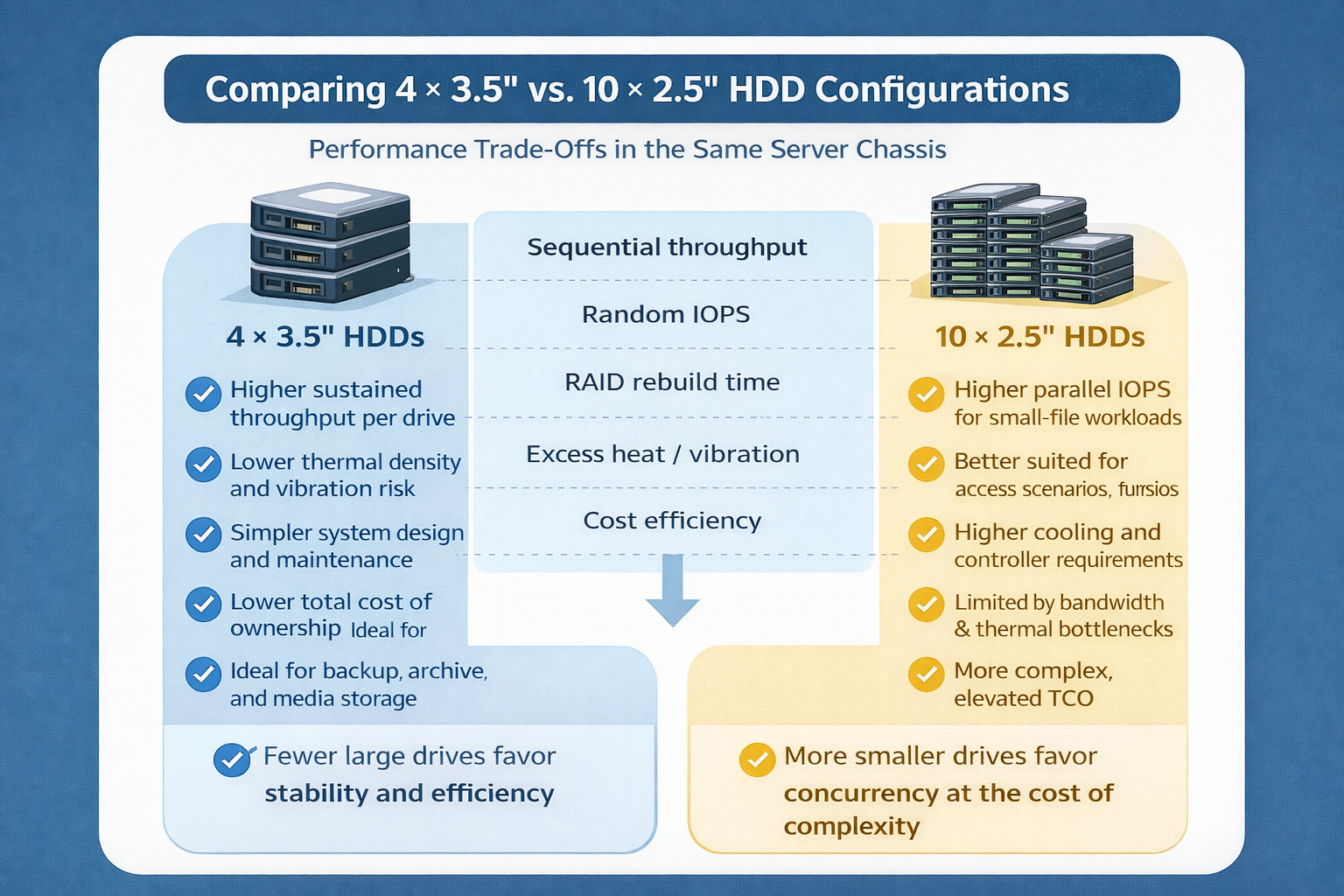

Storage Density Choices in the Same Server Chassis

Storage Density Choices in the Same Server Chassis

Jan 16,2026

When building a storage server, many users focus only on total capacity or drive count. In practice, the choice between fewer 3.5″ hard drives and more 2.5″ hard drives has a much deeper impact on performance, reliability, cooling efficiency, and long-term operating cost.

Welcoming 2026: Building the Next Year of AI Infrastructure Together

Welcoming 2026: Building the Next Year of AI Infrastructure Together

Jan 01,2026

As we step into 2026, the global compute landscape continues to evolve at an unprecedented pace.

AI workloads are denser. GPU clusters are larger. Expectations for stability, efficiency, and scalability are higher than ever.

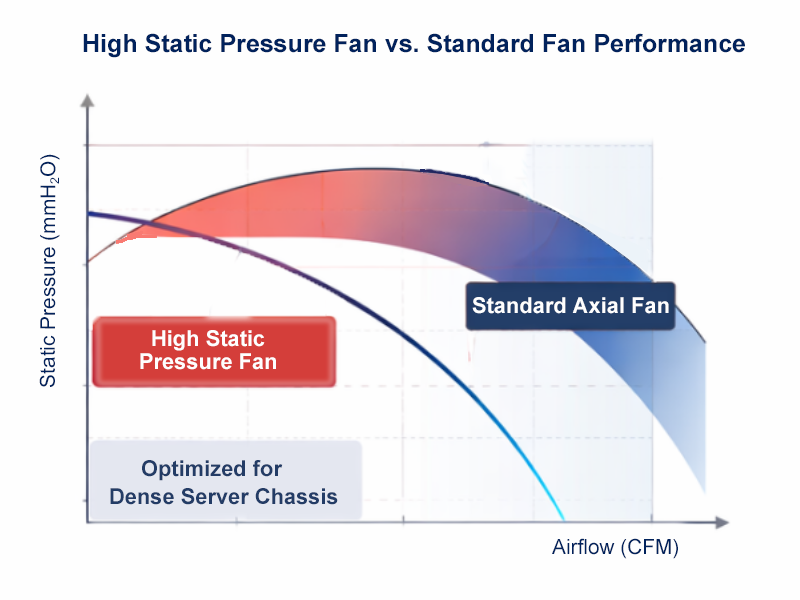

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones

How We Design Thermal Paths: Airflow, Static Pressure & Fan Zones

Dec 25,2025

In high-density GPU and AI servers, cooling isn’t an accessory — it’s infrastructure.

At OneChassis, thermal design starts at the chassis level. We engineer clear front-to-rear airflow paths to align with data-center cold-aisle / hot-aisle layouts, reducing recirculation and heat buildup under sustained load.