GPU Server Case with Smart Fan Speed Control: Efficient Cooling, Smarter Performance

In the world of high-performance computing, cooling isn’t optional—it’s survival. If your GPU Server Case can't handle the heat, you're not just risking performance dips, you're inviting thermal throttling, hardware failure, and angry clients. And here's the thing: most off-the-shelf fan control setups just don’t cut it anymore, especially for systems packed with powerful GPUs.

So how do you take back control? Let’s talk smart fan speed control—based on real GPU temps, not just the CPU or motherboard. In this piece, we’ll break down why it matters, how it works, and how your next GPU Server Chassis from TopOneChassis can make thermal nightmares a thing of the past.

Why Motherboard-Based Fan Control Isn’t Enough

Here’s a common setup: you plug your fans into the motherboard, jump into BIOS, and set a “Smart Fan” curve based on CPU temperature.

Sounds fine… until your GPUs ramp up under AI or rendering workloads while your fans stay slow and quiet until it's too late.

Why? Because the motherboard sensors don’t know your GPUs are on fire.

Real-World Example: AI Training Workload

A system with dual RTX A6000 GPUs hit 85°C during training, while motherboard sensors reported only 55°C. The fans didn’t ramp up in time, resulting in throttling and slower job completion.

Lesson: Relying on CPU/motherboard temps for fan speed = delayed response.

Smart Fan Control Based on GPU Temperature

If your workloads are GPU-intensive, your fans should respond to GPU temps, not CPU or motherboard readings. With the right setup, fans ramp up as soon as GPU temperature starts to climb.
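As a rough illustration of what "following GPU temps" means in practice, here is a minimal Python sketch. It assumes NVIDIA GPUs with `nvidia-smi` on the PATH; the function names (`parse_gpu_temps`, `read_gpu_temps`, `control_temp`) are made up for this example, and using the hottest GPU as the control input is one reasonable choice, not the only one.

```python
import subprocess


def parse_gpu_temps(csv_text: str) -> list[int]:
    """Parse one integer temperature (deg C) per non-empty output line."""
    return [int(line.strip()) for line in csv_text.splitlines() if line.strip()]


def read_gpu_temps() -> list[int]:
    """Ask nvidia-smi for the core temperature of every GPU in the system."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=temperature.gpu",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,  # raise if nvidia-smi exits with an error
    )
    return parse_gpu_temps(result.stdout)


def control_temp(gpu_temps: list[int]) -> int:
    """Use the hottest GPU as the control input so no card is left behind."""
    if not gpu_temps:
        raise ValueError("no GPU temperatures available")
    return max(gpu_temps)
```

On a dual-GPU box reporting 85°C and 83°C, `control_temp` returns 85, so the fans follow the card that is closest to throttling. A real deployment would also need to handle GPUs that report no temperature at all, which this sketch does not.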

Tools That Make This Work:

| Software | OS Support | GPU-Based Fan Control | Notes |
|---|---|---|---|
| Fan Control | Windows | ✅ | Easy setup, custom curves |
| Argus Monitor | Windows | ✅ | More advanced, supports multiple sources |
| OpenBMC / iDRACFanSpeedControl | Linux/Server | ✅ | Enterprise-grade, perfect for rackmount servers |

The Case for GPU Fan Control in Enterprise Racks

In environments like data centers, AI research labs, and render farms, GPU workloads dominate. Here’s what customers using TopOneChassis products experience:

- Higher airflow efficiency: Because fans respond exactly when needed.

- Lower noise: Fans stay quiet when the system’s idle.

- Better uptime: Prevents unnecessary shutdowns due to overheating.

“We switched to a 4U TopOneChassis GPU Server Case and customized the fans to follow GPU temps directly. The result? 20% less thermal throttling and much quieter during off-peak hours.” — CTO, AI Startup in Singapore

Control Algorithms Matter: PID vs Stepwise

How you control the fan speed isn’t just about when—it’s about how. Here are the two main styles:

| Control Type | Description | Pros | Cons |
|---|---|---|---|
| Stepwise | Simple fan curves with temp ranges (e.g. 40°C = 30%, 70°C = 80%) | Easy to set up | Can be jumpy |
| PID (Proportional-Integral-Derivative) | Dynamic algorithm based on temp changes | Smooth, stable response | Slightly complex to configure |

If you're deploying TopOneChassis’s GPU Server Chassis in a production rack, PID gives you that extra level of thermal finesse.
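To make the difference concrete, here is a small Python sketch of both styles. Treat it as an illustration under assumptions: only the 40°C = 30% and 70°C = 80% steps come from the table above, while the other steps, the PID gains, the 40% base duty, and the class and function names are hypothetical values you would tune per chassis.

```python
from dataclasses import dataclass, field
from typing import Optional

# (threshold deg C, duty %). The 40/30 and 70/80 pairs echo the table;
# the remaining steps are illustrative assumptions.
STEPS = [(40, 30), (55, 50), (70, 80), (80, 100)]


def stepwise_duty(temp_c: float, steps=STEPS, floor: int = 20) -> int:
    """Return the duty of the highest step whose threshold has been reached."""
    duty = floor
    for threshold, step_duty in steps:
        if temp_c >= threshold:
            duty = step_duty
    return duty


@dataclass
class PIDFan:
    setpoint_c: float          # GPU temperature the loop tries to hold
    kp: float = 4.0            # hypothetical gains, tune per chassis
    ki: float = 0.2
    kd: float = 1.0
    base_duty: float = 40.0    # duty applied when sitting exactly at setpoint
    min_duty: float = 20.0
    max_duty: float = 100.0
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def update(self, temp_c: float, dt_s: float) -> float:
        """Return a fan duty (%) for the latest temperature sample."""
        error = temp_c - self.setpoint_c  # positive when running too hot
        if self._prev_error is None:
            derivative = 0.0              # no history on the first sample
        else:
            derivative = (error - self._prev_error) / dt_s
        self._prev_error = error

        candidate = self._integral + error * dt_s
        raw = (self.base_duty + self.kp * error
               + self.ki * candidate + self.kd * derivative)
        duty = min(self.max_duty, max(self.min_duty, raw))
        # Simple anti-windup: keep the new integral only if output is unclamped.
        if duty == raw:
            self._integral = candidate
        return duty
```

With these numbers, the stepwise curve jumps from 50% to 80% the moment the GPU crosses 70°C, which is the "jumpy" behavior the table mentions. The PID version moves the duty in proportion to how far and how fast the temperature drifts from the setpoint, at the cost of three gains to tune.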

Server-Grade Fan Control with BMC/IPMI

For enterprise deployments, you’re probably running rackmount servers with dedicated BMCs (Baseboard Management Controllers). Good news—these let you control fan speed independently of the OS.

Using solutions like OpenBMC or iDRACFanSpeedControl, admins can tie fan curves directly to GPU thermal data pulled via NVML (NVIDIA Management Library) or sensors over I2C.
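How the fan duty actually gets written is vendor-specific, so the sketch below keeps that part abstract. It shows one possible shape for the control loop in Python: `read_temps`, `duty_for`, and `set_duty` are hypothetical callables you would supply (for example an NVML or I2C reader, a fan curve, and a BMC-specific writer), and the fail-safe of running fans at 100% when telemetry is lost is an assumption of this example, not a documented behavior of any BMC.

```python
import time
from typing import Callable, Optional, Sequence

FAILSAFE_DUTY = 100  # assumption: go flat out if GPU telemetry is lost


def control_step(
    read_temps: Callable[[], Sequence[float]],
    duty_for: Callable[[float], float],
    set_duty: Callable[[float], None],
) -> float:
    """Run one iteration: read GPU temps, map the hottest to a duty, apply it."""
    try:
        duty = duty_for(max(read_temps()))
    except Exception:
        # Covers sensor errors and an empty reading; cooling wins over silence.
        duty = FAILSAFE_DUTY
    set_duty(duty)
    return duty


def run(
    read_temps: Callable[[], Sequence[float]],
    duty_for: Callable[[float], float],
    set_duty: Callable[[float], None],
    interval_s: float = 2.0,
    iterations: Optional[int] = None,
) -> None:
    """Repeat control_step every interval_s seconds (forever if iterations is None)."""
    count = 0
    while iterations is None or count < iterations:
        control_step(read_temps, duty_for, set_duty)
        time.sleep(interval_s)
        count += 1
```

Passing the sensor and actuator in as functions keeps the loop testable on a workstation with fake readings before it ever touches real fans.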

Smart Fan Control Reduces Noise and Saves Power

Full-blast fans aren’t just noisy—they’re expensive.
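A quick back-of-the-envelope check shows why. Under the idealized fan affinity laws, fan power scales with the cube of fan speed. The Python sketch below applies that rule; real fans and real chassis deviate from the ideal, so read the numbers as rough estimates, and note that `relative_fan_power` is a name invented for this example.

```python
def relative_fan_power(speed_fraction: float) -> float:
    """Ideal fan affinity law: power scales with the cube of speed."""
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be between 0 and 1")
    return speed_fraction ** 3


for pct in (100, 80, 60, 40):
    share = relative_fan_power(pct / 100)
    print(f"{pct:>3}% speed -> {share:.0%} of full-speed power")
```

In this idealized model, dropping fans from 100% to 80% speed roughly halves their power draw, which is why curves that let fans slow down during idle periods pay off across a full rack.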

Integration with TopOneChassis GPU Server Chassis

All this theory is great, but does the hardware support it?

If you're using TopOneChassis’s custom GPU server chassis, the answer is yes. Many of our rackmount and 4U cases come with:

- High-performance PWM fan support

- Fully customizable airflow layout

- Optional BMC/IPMI compatibility for enterprise control

- Multi-GPU cooling built into the design

Need a setup optimized for your AI center or video processing farm? Contact us to get tailored chassis designs with GPU-driven cooling logic built in.

Use Case: AI Inference Deployment in Edge Rack

A TopOneChassis client deployed 30+ GPU servers for real-time inference at the edge. They customized each 4U chassis to include:

- Rear-to-front airflow with six PWM fans

- GPU-triggered fan speed logic via onboard BMC

- Redundant fan configuration for failover

Result: 27% increase in system stability and 40% drop in cooling energy cost over a 6-month span.

Final Thoughts: Why It’s Time to Get Smarter

Here’s the bottom line: if you’re still letting your motherboard decide fan speeds, you’re flying blind.

With smart GPU-based fan control, you:

- Protect your GPUs from thermal throttling

- Reduce noise

- Save power

- Extend hardware lifespan

And if you’re in the market for a serious GPU Server Case that can handle all this? TopOneChassis offers customizable, enterprise-grade chassis built to support smart cooling out of the box.

How We Build GPU Server Cases That Engineers Trust? (From Sheet Metal to Stability)

How We Build GPU Server Cases That Engineers Trust? (From Sheet Metal to Stability)

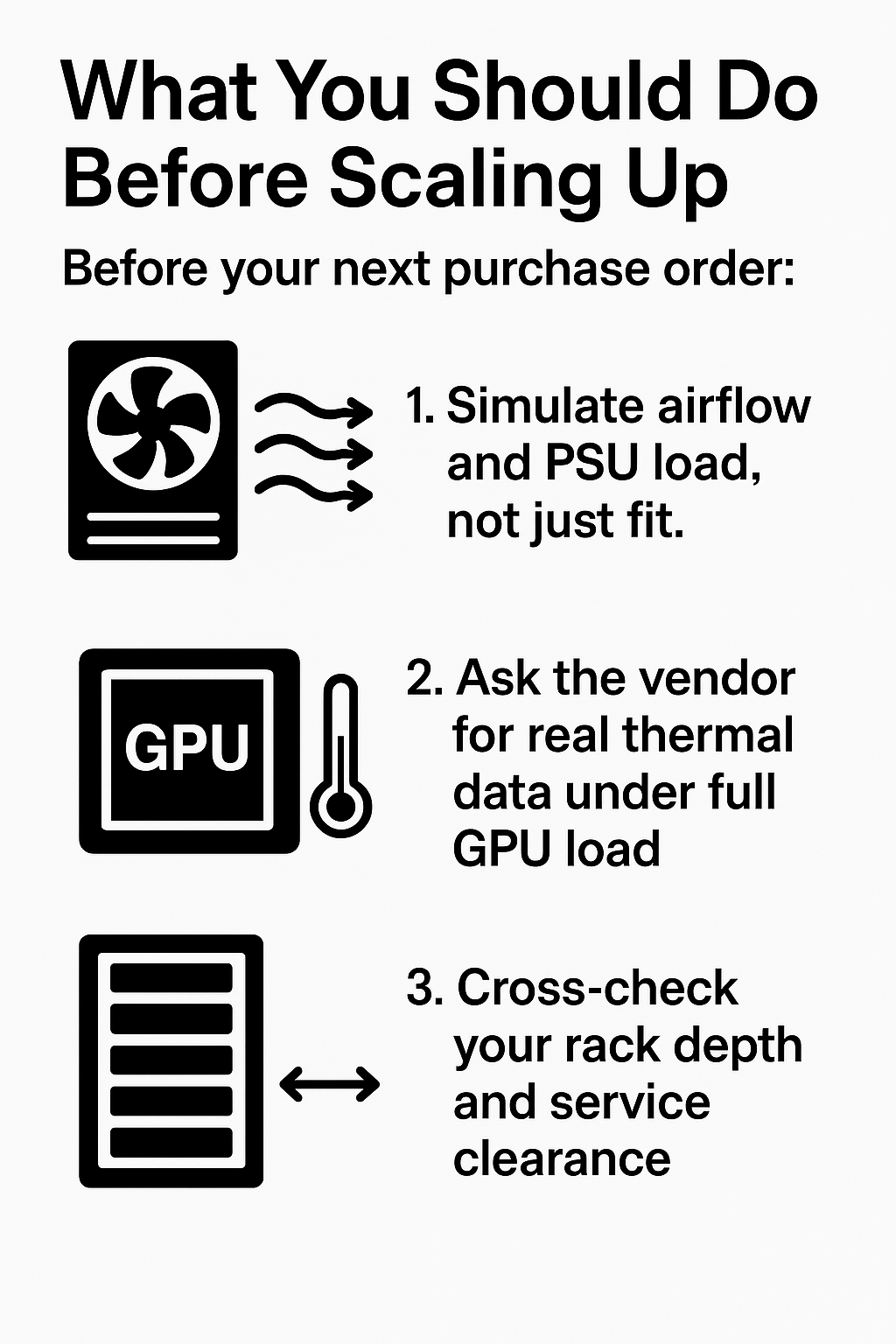

Before You Scale Up: The Server Case Compatibility Traps No One Warns You About

Before You Scale Up: The Server Case Compatibility Traps No One Warns You About

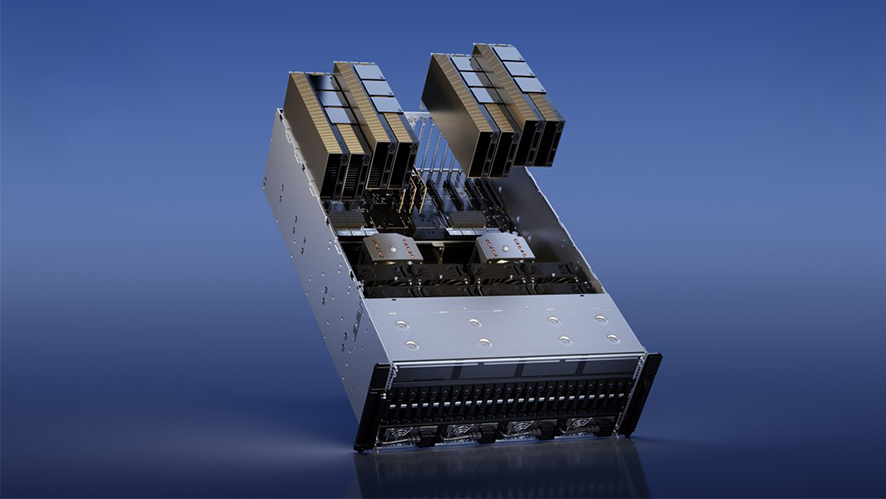

Multi-GPU Support Explained: Inside Scalable Server Case Architecture

Multi-GPU Support Explained: Inside Scalable Server Case Architecture

Double the Power, None of the Downtime: Inside GPU Server Cases with Redundant PSU Design

Double the Power, None of the Downtime: Inside GPU Server Cases with Redundant PSU Design